The Importance of RF Calibration of Test Equipment

With today’s high-resolution digital displays showing many digits on both sides of the decimal point, we tend to be lulled into a false sense of security about the accuracy of our test equipment. First, we need to understand the capabilities of our test instruments. Second, we must ensure they remain as accurate as they were when they left the manufacturer’s facility. Test equipment gets subjected to an extreme amount of wear and tear, especially if it is routinely transported to a variety of locations. Vibration, temperature fluctuations, and the degradation of connecting cables all contribute to the overall variance in measurement. The need for calibration of RF test equipment is perhaps more critical today than ever before.

Calibration serves three main purposes. First, it ensures that the readings obtained from an instrument are consistent with other measurements and with a recognized standard, typically one published by a government body such as the National Institute of Standards and Technology (NIST) or the Department of Defense, whose standards are often referred to as MIL-STDs. Second, calibration determines the accuracy of the readings the test instrument displays: do the readings on the display accurately represent the real world? Third, it establishes that the instrument can be trusted and that it is consistently reliable. These three purposes matter for all test equipment and are especially critical for RF test equipment. The prevalence of RF devices at both the consumer level and the commercial/industrial level has led to a crowded RF spectrum, so technicians working in these environments need a high level of confidence in the test equipment they use to trace and diagnose issues.

Calibration of RF measurement equipment takes on a new dimension when one considers all the sources of error or variation in the measurement process. Consider the calibration of a simple mercury or alcohol thermometer. The thermometer is placed in the reference standard, a temperature is read from it, and the deviation of that reading from the standard indicates how accurate the thermometer is. When the thermometer is put into use, that deviation can be applied to each reading to determine an accurate temperature. Few variables are involved in this situation. RF test equipment, by contrast, involves connections, cables, temperature, vibration, and a variety of other variables. Calibrating the instrument itself is key to obtaining accurate results, but so is evaluating the rest of the infrastructure that connects the test instrument to the device under test (DUT), in order to determine where variance is introduced into the results displayed on the measurement equipment.
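The difference between the two cases can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the sign convention (offset = instrument reading minus reference value), and every number are hypothetical, and a real RF measurement would also carry uncertainty terms that are left out here.

```python
def corrected_reading(displayed, cal_offset):
    """Apply a calibration correction to a displayed value.

    cal_offset is assumed to be (instrument reading - reference value)
    as found during calibration, so it is subtracted from later readings.
    """
    return displayed - cal_offset


def power_at_dut(displayed_dbm, instrument_offset_db, path_losses_db):
    """Estimate the power at the DUT from a displayed power reading.

    First correct the instrument itself, then add back the loss (in dB)
    of each cable or adapter between the DUT and the instrument.
    """
    return corrected_reading(displayed_dbm, instrument_offset_db) + sum(path_losses_db)


# Thermometer: one variable. Hypothetical calibration found it reads 0.4 degrees high.
temperature = corrected_reading(37.9, 0.4)

# RF power reading: the instrument offset plus every element in the signal path.
# Hypothetical values: instrument reads 0.2 dB high, cable loses 1.5 dB, adapter 0.1 dB.
dut_power_dbm = power_at_dut(-10.3, 0.2, [1.5, 0.1])

print(f"Corrected temperature: {temperature:.1f}")
print(f"Estimated power at DUT: {dut_power_dbm:.1f} dBm")
```

The thermometer needs a single correction, while the RF estimate depends on every element in the path, so a worn cable or a loose adapter changes the result even when the instrument itself is within specification.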

What is the correct calibration schedule for your equipment? That depends. Look at the environment you work in: is your test equipment transported throughout your facility or to other facilities? Are your technicians careful with the equipment or is it subjected to bumps, knocks, pulling on cables, and the occasional fall? Establish an annual or semi-annual calibration schedule, review the results over time, and adjust the schedule based on the information you receive.
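One way to picture "review the results and adjust" is a simple rule that lengthens the interval after an in-tolerance result and shortens it after an out-of-tolerance one. The sketch below is an illustration only: the function name, the adjustment factors, and the 3 to 24 month limits are assumptions made for this example, not values taken from this article or from any standard.

```python
def next_interval_months(current_months, in_tolerance, min_months=3, max_months=24):
    """Suggest the next calibration interval from the latest calibration result.

    Assumed rule for illustration: extend the interval by about 10% when the
    instrument was found in tolerance, cut it by about 30% when it was not,
    and keep the result between min_months and max_months.
    """
    factor = 1.1 if in_tolerance else 0.7
    proposed = round(current_months * factor)
    return max(min_months, min(max_months, proposed))


# Hypothetical history for one instrument, starting from an annual schedule.
interval = 12
for found_in_tolerance in [True, True, False, True]:
    interval = next_interval_months(interval, found_in_tolerance)
    print(f"In tolerance: {found_in_tolerance} -> next interval: {interval} months")
```

Equipment that travels between sites or takes rough handling would be expected to drift toward shorter intervals under a rule like this, while bench equipment in a stable environment would drift toward longer ones.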

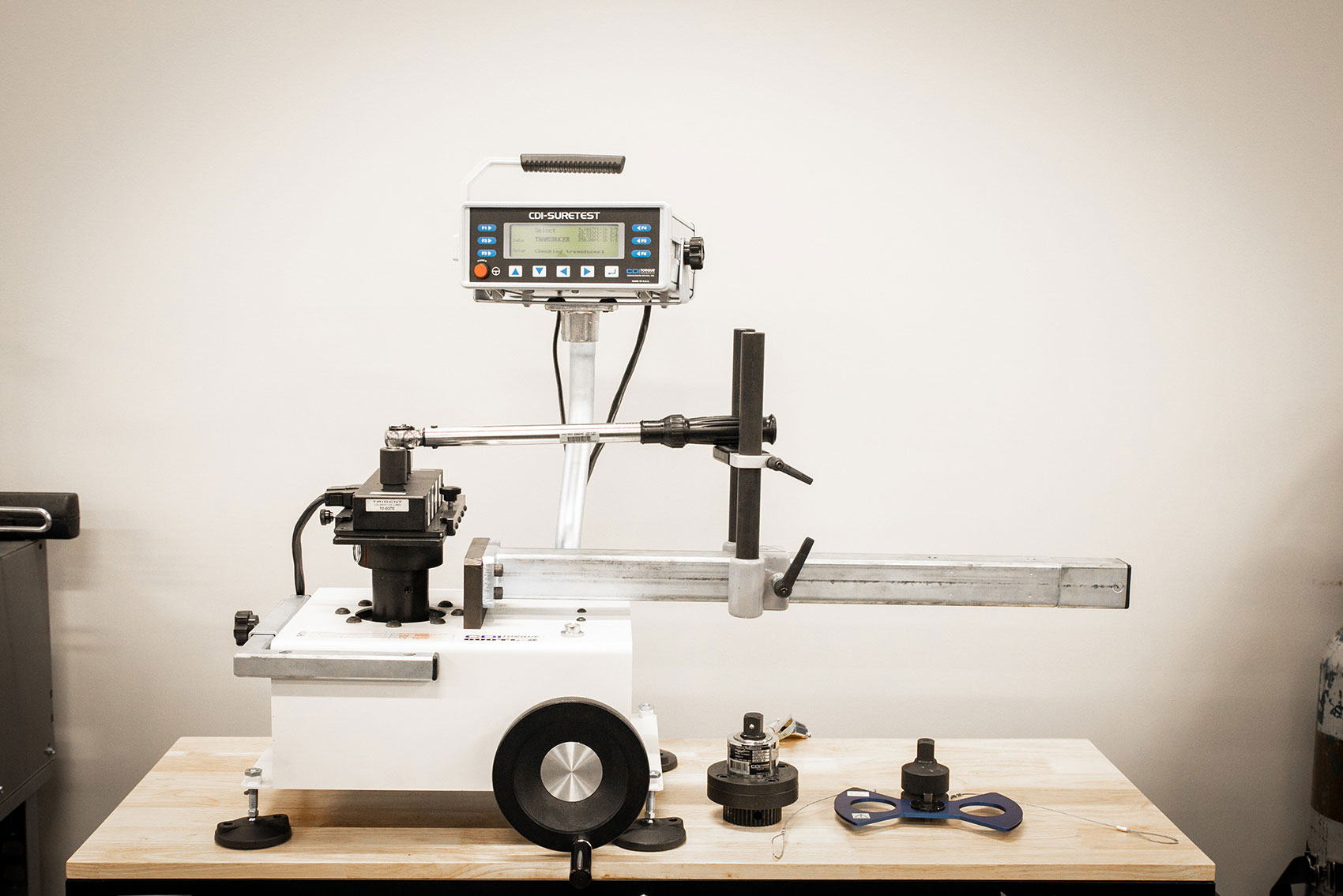

Trident Calibration Labs, with locations in Phoenix, Arizona and Los Angeles, California, can handle all your RF test equipment calibration needs in our ANAB ISO/IEC 17025 accredited labs.
